How Generative AI in DevOps is Changing Cloud Automation and Security

Modern DevOps teams are under pressure to release software faster, maintain airtight security, and minimize cloud waste—all while managing increasingly complex systems. Generative AI in DevOps introduces a new level of intelligence and speed, giving teams tools to generate code, detect vulnerabilities, and streamline operations automatically.

This shift doesn’t just impact developers and SREs—it also changes how CMOs plan and execute digital strategies. Faster releases, fewer outages, and more scalable infrastructure all lead to improved campaign execution, stronger customer experiences, and measurable business outcomes.

Why DevOps Needs Generative AI Now More Than Ever

Traditional automation and scripting can’t keep pace with modern DevOps demands. Teams are bogged down by repetitive tasks like writing infrastructure code, configuring pipelines, and manually triaging errors. These tasks slow delivery and increase the chance of human error.

Generative AI addresses these bottlenecks. It can generate infrastructure-as-code templates, automate test writing, suggest CI/CD optimizations, and identify misconfigurations before they cause failures.

For CMOs, this translates to faster time-to-market for digital campaigns. For technical leads, it means more stable systems and more time spent on strategic initiatives. The use of generative AI in DevOps is no longer experimental—it’s becoming a necessity.

Core Benefits of Generative AI for Cloud Automation and Security

The practical impact of using AI in DevOps can be seen in five core areas that affect every organization: speed of delivery, pipeline intelligence, proactive security, developer output, and cost control.

Faster and More Reliable Infrastructure Provisioning

Infrastructure provisioning used to mean writing Terraform configurations or CloudFormation templates by hand. Now, generative AI models can create them from simple prompts like “Deploy a secure Kubernetes cluster in AWS.”

They can also validate the generated code against internal policies, which shortens setup time and reduces the risk of configuration drift. With human review in the loop, provisioning becomes more repeatable, more auditable, and far less error-prone.
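A minimal sketch of what validating generated configuration against internal policy could look like. The config keys (`endpoint_public_access`, `secrets_encryption`, `allowed_cidrs`) and the three rules are hypothetical, chosen for illustration only; a real setup would check the actual IaC output with a dedicated policy tool.

```python
from typing import Any


def check_cluster_config(config: dict[str, Any]) -> list[str]:
    """Return policy violations for a generated cluster config.

    The keys and rules here are hypothetical examples of an internal policy.
    """
    violations = []
    # Fail closed: a missing key is treated as "public".
    if config.get("endpoint_public_access", True):
        violations.append("API endpoint must not be publicly accessible")
    if not config.get("secrets_encryption", False):
        violations.append("Secrets encryption must be enabled")
    if "0.0.0.0/0" in config.get("allowed_cidrs", []):
        violations.append("Open CIDR 0.0.0.0/0 is not allowed")
    return violations


# Example: a config as an AI assistant might have produced it.
generated = {
    "endpoint_public_access": True,
    "secrets_encryption": True,
    "allowed_cidrs": ["10.0.0.0/16"],
}

for violation in check_cluster_config(generated):
    print("POLICY VIOLATION:", violation)
```

Running a check like this before anything is applied keeps the AI in the role of drafting, while the policy stays under the team's control.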

Smarter CI/CD Pipelines

Using AI in DevOps pipelines brings decision-making into the release process. AI can:

- Analyze the risk of a code change based on commit history

- Suggest test coverage improvements

- Dynamically adjust pipeline stages based on success rates or resource usage

This leads to faster, more predictable delivery—with fewer failed deployments or hotfixes.
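To make the idea of change-risk analysis concrete, here is a rough sketch of a risk score built from commit statistics. The `ChangeStats` fields and all weights are assumptions for illustration; an AI-driven tool would learn such weights from historical deployment data rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class ChangeStats:
    files_changed: int
    lines_changed: int
    recent_failures_in_touched_files: int  # failed deploys that touched the same files
    has_tests: bool


def risk_score(change: ChangeStats) -> float:
    """Return a heuristic risk score in [0, 1]. Weights are illustrative."""
    score = 0.0
    score += min(change.files_changed / 20, 1.0) * 0.3
    score += min(change.lines_changed / 500, 1.0) * 0.3
    score += min(change.recent_failures_in_touched_files / 3, 1.0) * 0.3
    if not change.has_tests:
        score += 0.1
    return round(score, 2)


small_fix = ChangeStats(files_changed=1, lines_changed=10,
                        recent_failures_in_touched_files=0, has_tests=True)
big_refactor = ChangeStats(files_changed=40, lines_changed=1000,
                           recent_failures_in_touched_files=5, has_tests=False)

print(risk_score(small_fix), risk_score(big_refactor))
```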

Early Detection and Prevention of Security Risks

AI can continuously scan your infrastructure, configs, and codebases for vulnerabilities. It uses machine learning to identify risks earlier in the development cycle—sometimes before code is merged.

For example, it can detect overly permissive IAM roles, outdated dependencies, or suspicious behavior in logs. This improves the security posture without slowing down development.
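As one concrete case, overly permissive IAM statements can be caught with a simple rule-based check, which an AI-assisted scanner might run alongside its learned detections. The sketch below assumes the standard AWS IAM policy document shape (`Statement`, `Effect`, `Action`, `Resource`); the function name and the sample policy are made up for illustration.

```python
def find_wildcard_statements(policy: dict) -> list[dict]:
    """Return Allow statements that combine a wildcard action with Resource "*"."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]

    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Action and Resource may each be a string or a list of strings.
        if isinstance(actions, str):
            actions = [actions]
        if isinstance(resources, str):
            resources = [resources]
        broad_action = any(a == "*" or a.endswith(":*") for a in actions)
        broad_resource = "*" in resources
        if broad_action and broad_resource:
            flagged.append(stmt)
    return flagged


# Hypothetical policy for illustration.
sample_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::reports/*"},
        {"Effect": "Allow", "Action": ["iam:*"], "Resource": "*"},
    ],
}

for stmt in find_wildcard_statements(sample_policy):
    print("Overly permissive:", stmt)
```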

Significant Gains in Developer Productivity

Developers often spend too much time on boilerplate, debugging, and documentation. AI assistants can:

- Generate reusable components

- Autocomplete code with context-specific suggestions

- Write test cases or update outdated documentation

This frees up developers to focus on business logic and innovation. The productivity gains also support CMOs, who rely on engineering to roll out customer-facing features quickly.

Cost Optimization at Scale

AI tools can analyze cloud spending and suggest ways to reduce waste. These include:

- Recommending instance rightsizing

- Shutting down idle resources

- Identifying underutilized services

For large organizations, this can lead to millions in savings. For marketing leaders, it's a way to reallocate budget to growth instead of overhead.
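The kind of analysis behind these recommendations can be sketched with plain thresholds on CPU utilization. The thresholds, instance names, and sample values below are hypothetical; commercial tools typically use longer time windows and more signals (memory, network, pricing data).

```python
from statistics import mean


def classify_instance(cpu_samples: list[float],
                      idle_below: float = 5.0,
                      rightsize_below: float = 30.0) -> str:
    """Classify an instance from CPU utilization samples (percent)."""
    if max(cpu_samples) < idle_below:
        return "idle"        # never does meaningful work: shutdown candidate
    if mean(cpu_samples) < rightsize_below:
        return "rightsize"   # consistently low load: smaller instance candidate
    return "ok"


# Hypothetical fleet with a few utilization samples per instance.
fleet = {
    "web-1": [40.0, 55.0, 62.0],
    "batch-7": [1.0, 2.0, 1.0],
    "api-3": [8.0, 12.0, 10.0],
}

for name, samples in fleet.items():
    print(name, classify_instance(samples))
```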

How Generative AI Is Embedded in the DevOps Lifecycle

Rather than functioning as a bolt-on tool, generative AI is increasingly embedded across each phase of the DevOps lifecycle. Its presence is felt from the moment infrastructure is defined to how applications are monitored and maintained in production.

Infrastructure as Code (IaC) Creation and Validation

AI models trained on thousands of IaC patterns can generate secure and compliant configurations automatically. They also flag anti-patterns or deprecated practices before deployment.

This improves velocity and reduces risk, especially for teams that manage multi-cloud or hybrid environments.

Automated Testing and Monitoring

AI can auto-generate tests based on recent code changes, user flows, or bug reports. It can also identify gaps in test coverage and recommend high-priority areas to monitor.

In production, AI-enhanced observability tools surface anomalies faster—often predicting failures before they happen. This reduces incident duration and improves customer experience.
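As a simplified stand-in for what such observability tools do, the sketch below flags a metric value that deviates strongly from its recent history using a z-score. This is a basic statistical check, not the learned models real products use; the threshold of 3 standard deviations and the latency values are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Return True if `latest` is more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical p95 latency samples in milliseconds.
latency_ms = [100.0, 102.0, 98.0, 101.0, 99.0]

print(is_anomalous(latency_ms, 101.0))
print(is_anomalous(latency_ms, 140.0))
```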

Continuous Integration and Deployment Enhancements

CI/CD tools that use AI can evaluate the risk of a deployment based on:

- Who wrote the code

- What files were changed

- How similar changes performed historically

Based on this, the pipeline can automatically apply canary deployments, delay risky merges, or trigger rollbacks. It’s a smart layer that optimizes delivery for safety and speed.
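The decision layer described above can be pictured as a small policy that maps a risk score to a rollout action and watches the canary for regressions. The function names, thresholds, and action labels are hypothetical; in practice the risk score would come from a model and the actions from your CI/CD tool.

```python
def choose_rollout(risk: float) -> str:
    """Map a risk score in [0, 1] to a rollout action. Thresholds are illustrative."""
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    if risk >= 0.7:
        return "delay_merge"   # hold the change for human review
    if risk >= 0.3:
        return "canary"        # expose the change to a small share of traffic first
    return "full_rollout"


def should_rollback(canary_error_rate: float,
                    baseline_error_rate: float,
                    tolerance: float = 0.01) -> bool:
    """Trigger a rollback when the canary is clearly worse than the baseline."""
    return canary_error_rate > baseline_error_rate + tolerance


print(choose_rollout(0.5))
print(should_rollback(canary_error_rate=0.05, baseline_error_rate=0.01))
```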

AI for DevSecOps

AI-integrated DevSecOps tools scan code, containers, and infrastructure for compliance and vulnerabilities as part of the development process—not after deployment.

They can flag secrets, enforce encryption standards, and even recommend fixes. This proactive model helps organizations maintain security without delaying releases.
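Secret detection is the easiest of these checks to illustrate. The sketch below scans text line by line with a few regular expressions; the pattern set is a small, assumed sample (AWS access key ID prefixes, PEM private key headers, quoted passwords), whereas dedicated scanners ship far larger rule sets and entropy checks.

```python
import re

# Illustrative patterns only; real scanners use many more rules.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]{4,}['\"]"),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspected secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


# The key below is the placeholder value AWS uses in its own documentation.
sample = (
    'region = "us-east-1"\n'
    'aws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    'password = "hunter2hunter2"\n'
)

for lineno, kind in scan_text(sample):
    print(f"line {lineno}: possible {kind}")
```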

How to Implement Generative AI in Your DevOps Stack

Bringing generative AI into your DevOps stack doesn’t require a full rebuild—but it does take a strategic rollout. The goal is to start small, integrate thoughtfully, and expand as the value becomes clear.

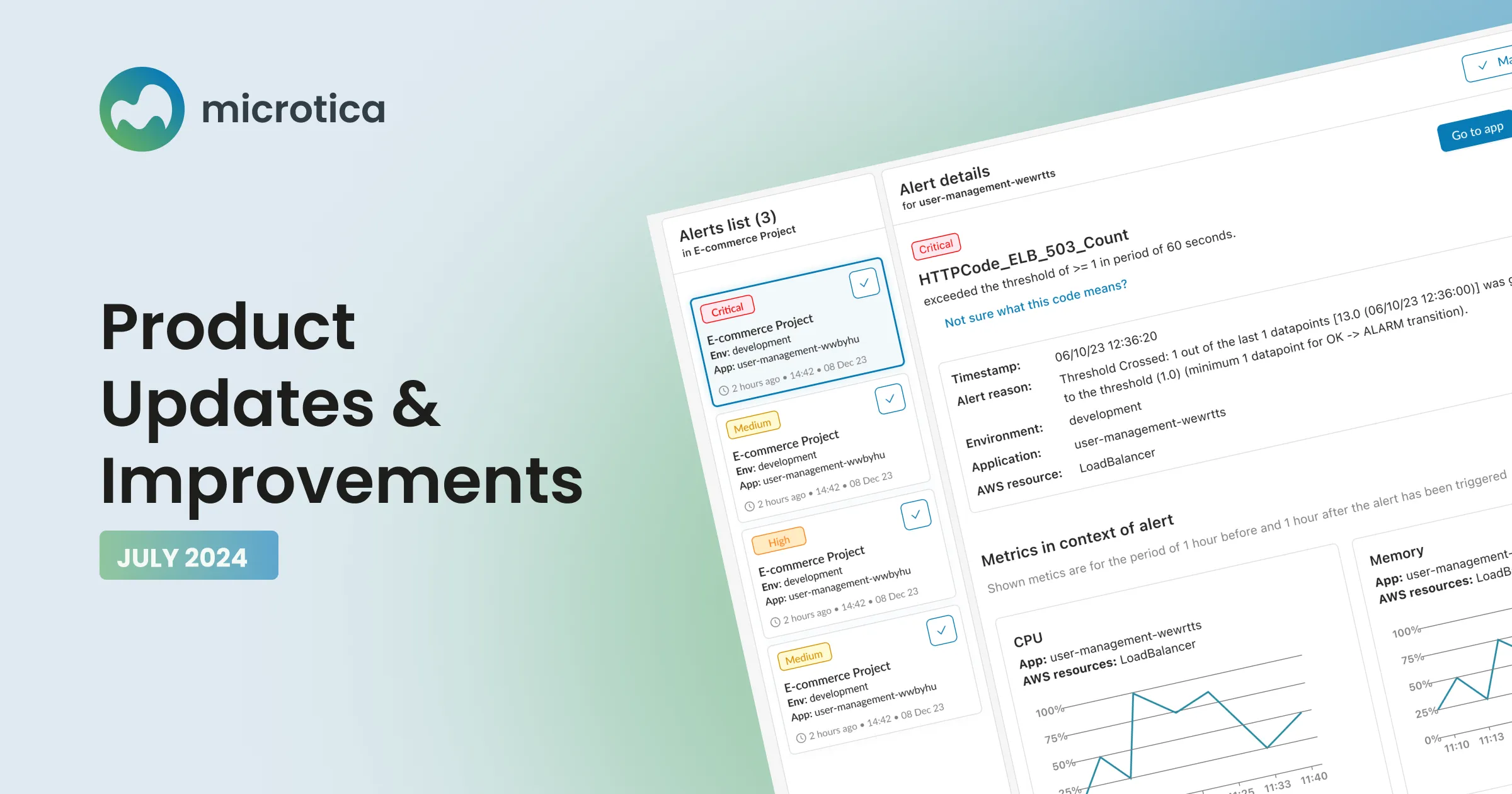

Use AI-Native Platforms

Platforms like Microtica come with built-in generative AI features designed specifically for DevOps use cases. They help:

- Generate infrastructure blueprints

- Recommend cost optimizations

- Automate compliance checks

Because they’re purpose-built, teams don’t need to assemble a patchwork of tools or manage complex model training.

Securely Connect AI Models to Internal Data

To be useful, AI models need access to internal metrics, logs, configuration files, and historical data. Teams should set up secure data pipelines that allow AI tools to learn from this information without exposing sensitive systems.

Encryption, data access policies, and audit trails are key to building trust and security.
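One practical piece of such a pipeline is redacting sensitive values from logs before they reach an AI tool. The following is a minimal sketch under assumed requirements (mask email addresses, IPv4 addresses, and bearer tokens); the patterns and placeholder strings are illustrative and would need to match your own data classification rules.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
BEARER = re.compile(r"(?i)(bearer\s+)[A-Za-z0-9._~+/=-]+")


def redact(line: str) -> str:
    """Mask emails, IPv4 addresses, and bearer tokens in a log line."""
    line = EMAIL.sub("<email>", line)
    line = IPV4.sub("<ip>", line)
    line = BEARER.sub(r"\1<token>", line)  # keep the "Bearer " prefix, mask the token
    return line


print(redact("login ok user=ana@example.com from 10.2.3.4"))
print(redact("Authorization: Bearer abc.def-123"))
```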

Start with Low-Risk, High-Impact Areas

Teams should begin with isolated use cases that won’t disrupt production if the AI gets something wrong. Examples:

- Generating non-critical IaC templates

- Recommending cloud cost optimizations

- Writing test cases for low-priority code

Success here builds confidence and allows teams to expand AI usage gradually.

Governance, Access Controls, and AI Ethics

AI recommendations must be reviewed and validated—especially in security-sensitive environments. Teams need:

- Clear ownership of AI outputs

- Access control rules for AI-generated scripts

- Documentation to explain AI decisions

This level of governance helps prevent misuse and builds transparency for both engineers and non-technical stakeholders.

Key Challenges and How to Prepare for Them

To make the most of using AI in DevOps, it’s important to recognize and plan for potential difficulties, from trust issues to technical integration.

Trust and Accuracy in AI Output

AI-generated code, recommendations, or alerts must be verified before use. Incorrect configurations can create outages or introduce vulnerabilities.

Use peer reviews, linting tools, and policy validation steps to double-check AI suggestions.

Integrating with Existing Tooling

Most companies already have CI/CD pipelines, observability stacks, and IaC repositories. AI tools must integrate smoothly into these environments.

APIs, plugins, and SDKs help embed generative AI capabilities without overhauling your architecture.

AI Cost, Vendor Lock-In, and Licensing

AI platforms vary widely in how they charge—some bill per API call, others per user or per environment. Before committing, evaluate:

- Total cost of ownership

- Data portability

- Exit strategies

Avoid platforms that make it hard to extract or reuse your own infrastructure code and data.
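A quick way to compare pricing models is to compute the break-even point between per-call and per-seat billing. All prices and volumes in this sketch are made-up placeholders, not quotes from any vendor; substitute your own numbers.

```python
def per_call_cost(calls_per_month: int, price_per_call: float) -> float:
    """Monthly cost under usage-based (per API call) pricing."""
    return calls_per_month * price_per_call


def per_seat_cost(seats: int, price_per_seat: float) -> float:
    """Monthly cost under per-user pricing."""
    return seats * price_per_seat


def break_even_calls(seats: int, price_per_seat: float, price_per_call: float) -> float:
    """Monthly call volume at which both pricing models cost the same."""
    return per_seat_cost(seats, price_per_seat) / price_per_call


# Hypothetical inputs: 25 engineers, $20 per seat, $0.002 per call.
seats, seat_price, call_price = 25, 20.0, 0.002

threshold = break_even_calls(seats, seat_price, call_price)
print(f"Per-seat pricing wins above roughly {threshold:,.0f} calls per month")
```

Below the break-even volume, usage-based billing is cheaper; above it, per-seat pricing is. The same comparison extends to per-environment pricing by adding a third cost function.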

Team Enablement and Cultural Shifts

Adopting generative AI in DevOps requires change management. Developers may distrust AI suggestions or feel their roles are being replaced.

Leadership must emphasize that AI augments human expertise rather than replacing it, and provide training, guardrails, and space for experimentation.

The ROI of Generative AI in DevOps: What the Numbers Say

When it comes to justifying investment, tangible results matter most. The following figures highlight how generative AI is driving measurable gains in DevOps performance.

Performance Benchmarks from Early Adopters

Companies that adopted generative AI in their DevOps stacks have reported:

- 40–60% reduction in infrastructure setup time

- 30% fewer failed deployments

- 20% improvement in build pipeline speeds

These numbers are not just technical wins—they support faster feature releases and more reliable product experiences.

Business-Level Outcomes

For CMOs, the business impact includes:

- Shorter go-to-market timelines

- Fewer delays from technical issues

- Improved brand perception due to higher uptime

These outcomes make AI in DevOps a strategic asset—not just a technical upgrade.

Looking Ahead: Where Generative AI and DevOps Are Headed

The future of generative AI in DevOps promises even greater automation and intelligence, reshaping how teams build and manage cloud environments.

AI Agents and Autonomous Ops

Next-generation systems will include AI agents that:

- Monitor telemetry

- Trigger infrastructure changes

- Auto-resolve issues

This moves DevOps toward fully autonomous operations—reducing toil and improving uptime.

The Convergence of DevOps and MLOps

As AI products become mainstream, DevOps and MLOps are converging. Teams will need unified workflows to deploy both application code and machine learning models.

This will require shared tooling, governance frameworks, and cross-functional skill sets.

Conclusion

Generative AI in DevOps is not a futuristic concept. It is a current, practical toolset that improves velocity, quality, and efficiency across the software delivery lifecycle. For CMOs, it enables faster campaign support and better product reliability. For DevOps teams, it streamlines workflows and enhances security. And for the business as a whole, it reduces cost and technical friction. The sooner organizations begin experimenting with DevOps and AI, the faster they’ll see measurable results, from both a technical and a commercial perspective.

FAQ

What is generative AI in DevOps?

It refers to AI models that generate code, configurations, and decisions to automate tasks in infrastructure management, CI/CD, and security.

How can a DevOps team take advantage of artificial intelligence?

By automating repetitive tasks, enhancing deployment pipelines, predicting system failures, and improving code quality—all with AI-generated support.

Is it safe to trust AI with production infrastructure?

Not blindly. AI output should always be validated. Trust increases as models are fine-tuned to your environment and outputs are reviewed consistently.

Are these tools expensive to run?

Costs vary. Some tools offer cost-saving insights that outweigh usage fees. Evaluate platforms based on ROI—not just licensing models.

Which tools support generative AI in DevOps today?

Platforms like Microtica, GitHub Copilot (when used for IaC), Harness, and Azure DevOps are integrating generative AI into infrastructure, deployment, and monitoring pipelines.
